Comprehensive Transformer Test Types for Enhanced Performance

Understanding Transformer Test Types

Transformers have reshaped natural language processing (NLP) and machine learning, and evaluating them carefully is essential to confirm that they meet the required standards. Several types of test are used to assess transformers, each targeting a different aspect of their capabilities.

1. Performance Testing

Performance testing typically evaluates a transformer model with metrics such as accuracy, precision, recall, and F1 score. These metrics show how well the model performs on specific tasks, such as text classification, translation, and summarization. Performance testing is vital for understanding how effective the model is in real-world applications and for comparing it with other models.
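As an illustration of the metrics named above, here is a minimal sketch for a binary classification task. The function name `classification_metrics` and the sample labels are assumptions made for this example, not part of any particular library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for one positive class."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical gold labels and model predictions (1 = positive class).
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In practice an established library would usually be used for this; the sketch only makes the definitions explicit.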

3. Adversarial Testing

Adversarial testing feeds the model input deliberately crafted to deceive it, checking its resilience against malicious or unexpected inputs. By subjecting transformers to adversarial examples, developers can identify vulnerabilities and work on strengthening the model's defenses.
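One simple way to probe this kind of resilience is to apply small character-level perturbations and count how often the prediction changes. The sketch below assumes a hypothetical `predict` callable that maps a string to a label; `toy_predict` is a stand-in keyword classifier, not a real transformer, and adjacent-character swapping is only one of many possible perturbations.

```python
import random


def swap_adjacent_chars(text, rng):
    """Return a copy of text with one random pair of adjacent letters swapped."""
    positions = [i for i in range(len(text) - 1)
                 if text[i].isalpha() and text[i + 1].isalpha()]
    if not positions:
        return text
    i = rng.choice(positions)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def flip_rate(predict, texts, n_variants=5, seed=0):
    """Fraction of texts whose label changes under at least one perturbation."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    flipped = 0
    for text in texts:
        original = predict(text)
        variants = [swap_adjacent_chars(text, rng) for _ in range(n_variants)]
        if any(predict(v) != original for v in variants):
            flipped += 1
    return flipped / len(texts)


def toy_predict(text):
    """Stand-in model (assumption): positive if the word 'good' appears."""
    return "positive" if "good" in text.lower() else "negative"


texts = ["a good movie", "not worth watching", "good acting overall"]
rate = flip_rate(toy_predict, texts)
print(f"flip rate: {rate:.2f}")
```

A higher flip rate suggests the model is more sensitive to this perturbation. Stronger attacks, such as gradient-based or synonym-substitution methods, would need a real model and are outside this sketch.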

4. A/B Testing

A/B testing is a method widely used in product development and marketing. Applied to transformers, it compares two versions of a model (A and B) to see which performs better on specific tasks. Repeating the comparison as the model changes helps developers fine-tune it against real user feedback and behavior, which leads to a more user-friendly experience.
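Deciding whether version B really outperforms version A usually calls for a significance check. The sketch below uses a two-proportion z-test, which is one common choice and assumes each version was shown to a separate group of users. The success counts are made-up numbers for illustration.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for the difference between two success rates."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that A and B perform equally.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: helpful responses out of sessions served by each model.
z, p_value = two_proportion_z_test(success_a=420, n_a=1000,
                                   success_b=465, n_b=1000)
print(f"z = {z:.3f}, p = {p_value:.4f}")
```

If both models are scored on the same examples rather than on separate user groups, a paired test such as McNemar's would be the more appropriate choice.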

5. Robustness and Bias Evaluation

This type of testing aims to uncover inherent biases in the model, especially with respect to sensitive attributes such as gender, race, and ethnicity. By analyzing the model's outputs across demographic groups, researchers can identify and mitigate biases, leading to fairer and more equitable AI systems.
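Comparing outputs across groups can start with something as simple as the rate of positive predictions per group. The sketch below computes that rate and the gap between the highest and lowest group, often called the demographic parity gap. The group labels and predictions are invented for illustration, and this is only one of several fairness measures.

```python
from collections import defaultdict


def positive_rate_by_group(groups, predictions, positive=1):
    """Share of positive predictions within each demographic group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        if pred == positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group rates (0 = parity)."""
    return max(rates.values()) - min(rates.values())


# Hypothetical group membership and model outputs (1 = favorable outcome).
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = positive_rate_by_group(groups, predictions)
gap = demographic_parity_gap(rates)
print(rates, gap)
```

A large gap is a signal to investigate further rather than proof of bias on its own, since groups may differ in their true label distribution.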

6. Generalization Testing

Generalization tests evaluate how well a transformer model generalizes from its training data to unseen data. They measure the model's ability to apply learned knowledge to novel situations, which is essential for robust real-world applications.
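A common way to quantify this is the gap between accuracy on data resembling the training set and accuracy on held-out data from a different distribution. The sketch below assumes a hypothetical `predict` callable and two small labeled sets; `toy_predict` is a stand-in that has effectively memorized one keyword.

```python
def accuracy(predict, examples):
    """Fraction of (text, label) pairs that predict labels correctly."""
    return sum(1 for text, label in examples if predict(text) == label) / len(examples)


def generalization_gap(predict, in_distribution, out_of_distribution):
    """Return in-distribution accuracy, held-out accuracy, and their difference."""
    acc_in = accuracy(predict, in_distribution)
    acc_out = accuracy(predict, out_of_distribution)
    return acc_in, acc_out, acc_in - acc_out


def toy_predict(text):
    """Stand-in model (assumption): relies on a single keyword seen in training."""
    return "positive" if "great" in text else "negative"


# Hypothetical data: the held-out set uses wording the toy model never saw.
seen_style = [("great film", "positive"), ("dull film", "negative"),
              ("great cast", "positive"), ("weak plot", "negative")]
unseen_style = [("superb film", "positive"), ("awful film", "negative"),
                ("a great disappointment", "negative"),
                ("wonderful cast", "positive")]

acc_in, acc_out, gap = generalization_gap(toy_predict, seen_style, unseen_style)
print(acc_in, acc_out, gap)
```

Here the toy model scores perfectly on familiar wording but poorly on new wording, which is the kind of gap generalization testing is meant to expose.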

In conclusion, transformer test types encompass a wide range of evaluations, each vital for ensuring the models' reliability, effectiveness, and fairness. As transformers continue to evolve, ongoing testing will be crucial to meet the demands and complexities of modern NLP challenges.

-

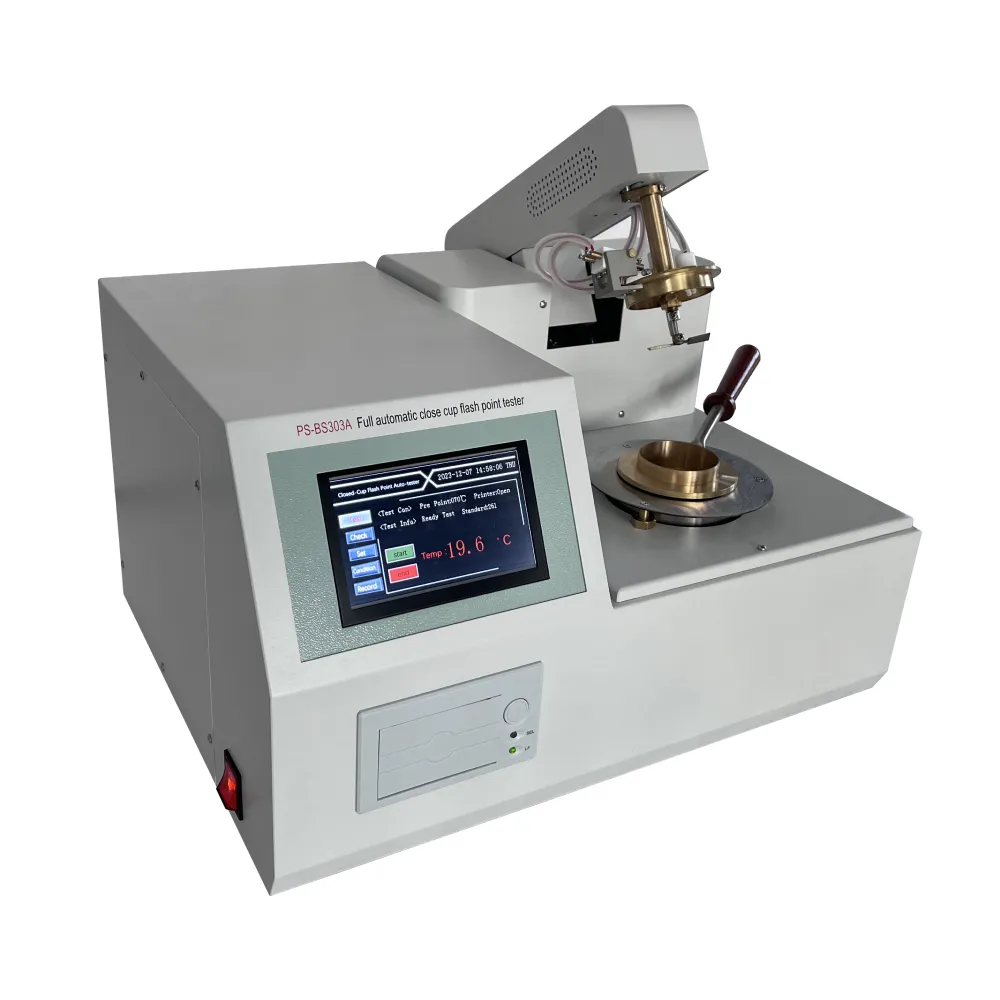

Differences between open cup flash point tester and closed cup flash point testerNewsOct.31,2024

-

The Reliable Load Tap ChangerNewsOct.23,2024

-

The Essential Guide to Hipot TestersNewsOct.23,2024

-

The Digital Insulation TesterNewsOct.23,2024

-

The Best Earth Loop Impedance Tester for SaleNewsOct.23,2024

-

Tan Delta Tester--The Essential Tool for Electrical Insulation TestingNewsOct.23,2024