transformer ttr

Understanding the Transformer Architecture in Natural Language Processing

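The Transformer architecture has revolutionized the field of Natural Language Processing (NLP) since its introduction in the seminal 2017 paper "Attention Is All You Need" by Vaswani et al. Earlier sequence-to-sequence models relied on recurrent neural networks (RNNs) or convolutional neural networks (CNNs). The Transformer instead is built entirely on attention, and in particular on self-attention, in which each word in a sequence computes a weighted combination of the representations of all the words in that sequence. Because it dispenses with recurrence, it can process all positions of a sequence in parallel during training. This makes it considerably faster to train than its recurrent predecessors and better at relating words that are far apart.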
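
The core operation behind self-attention is scaled dot-product attention, defined in the original paper as softmax(QK^T / sqrt(d_k)) V, where Q, K, and V are the query, key, and value matrices and d_k is the key dimension. The following is a minimal, dependency-free Python sketch of that formula, assuming the inputs are plain lists of equal-length vectors. The function names are illustrative, and the learned projection matrices and multiple heads of a real model are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V one query row at a time."""
    d_k = len(k[0])
    outputs = []
    for query in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(query, key)) / math.sqrt(d_k)
            for key in k
        ]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append(
            [sum(w * val[j] for w, val in zip(weights, v)) for j in range(len(v[0]))]
        )
    return outputs


# Self-attention: queries, keys, and values all come from the same sequence.
# (A real model would first multiply x by learned matrices W_Q, W_K, W_V.)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(x, x, x))
```

Dividing by sqrt(d_k) keeps the dot products from growing with the vector dimension, which would otherwise push the softmax toward a near one-hot distribution.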

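The original model consists of an encoder and a decoder, each a stack of identical layers (six in the paper), so that higher layers can build progressively more abstract representations of the input. Each encoder layer applies multi-head self-attention followed by a position-wise feed-forward network, with a residual connection and layer normalization around each of these two sublayers. Each decoder layer uses a masked version of self-attention, which ensures that the prediction for a particular word depends only on the words preceding it, and adds a further attention sublayer that attends over the encoder's output before its own feed-forward network.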
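
The masking in the decoder's self-attention can be sketched by setting the score of every "future" position to negative infinity before the softmax, so that those positions receive exactly zero weight. The following minimal Python sketch assumes the same list-of-vectors representation as plain scaled dot-product attention. `causal_self_attention` is a hypothetical helper name, and the learned projections, multiple heads, residual connections, and layer normalization of a full decoder layer are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_self_attention(x: Matrix) -> Matrix:
    """Masked self-attention: position i may attend only to positions 0..i."""
    d_k = len(x[0])
    outputs = []
    for i, query in enumerate(x):
        scores = []
        for j, key in enumerate(x):
            if j > i:
                # Future position: mask it so it gets zero weight after softmax.
                scores.append(float("-inf"))
            else:
                scores.append(sum(a * b for a, b in zip(query, key)) / math.sqrt(d_k))
        m = max(scores)  # finite, because position i itself is never masked
        exps = [math.exp(s - m) for s in scores]  # math.exp(-inf) == 0.0
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * row[c] for w, row in zip(weights, x)) for c in range(d_k)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_self_attention(tokens)
# The first position can only see itself, so its output is its own vector.
print(out[0])  # [1.0, 0.0]
```

A useful property to check is that changing a later token never changes the outputs at earlier positions, which is exactly what lets the decoder be trained on whole sentences at once without seeing the answer.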

Furthermore, the Transformer adds positional encodings to its input embeddings to represent word order, because self-attention by itself treats its input as an unordered set: permuting the input words simply permutes the outputs. This positional information is crucial for understanding the structure of sentences (for example, telling "dog bites man" apart from "man bites dog"), enabling the model to maintain the context necessary for accurate language processing.
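
The original paper uses fixed sinusoidal encodings, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), which are added element-wise to the token embeddings. Here is a minimal Python sketch, assuming an even embedding size `d_model`; the function names are illustrative. Some later models, such as BERT, learn their position embeddings instead of using this fixed formula.

```python
import math
from typing import List


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """Return a seq_len x d_model table of sinusoidal position encodings."""
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model // 2):
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle))  # even dimension 2i
            row.append(math.cos(angle))  # odd dimension 2i + 1
        table.append(row)
    return table


def add_positions(embeddings: List[List[float]]) -> List[List[float]]:
    """Add position information to token embeddings element-wise."""
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]


pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

Because the encoding differs at every position, two identical tokens at different positions end up with different input vectors, which is what allows the otherwise order-blind self-attention to distinguish them.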

In recent years, many variants of the original Transformer have emerged, including BERT (which uses only the encoder stack), GPT (which uses only the decoder stack), and T5 (which keeps the full encoder-decoder design). These models have pushed the boundaries of what is achievable in tasks such as machine translation, text summarization, sentiment analysis, and question answering. By pretraining on vast amounts of text and then fine-tuning on specific tasks, they have set new state-of-the-art results on many NLP benchmarks, showcasing the power of the Transformer framework.

In conclusion, the Transformer architecture is a testament to the ongoing evolution of AI and NLP. Its ability to process language more efficiently and effectively has opened new avenues for research and application, making it a cornerstone of modern language models. As exploration continues, it promises still more capable models and applications, with machines understanding and generating human language with increasing sophistication.

-

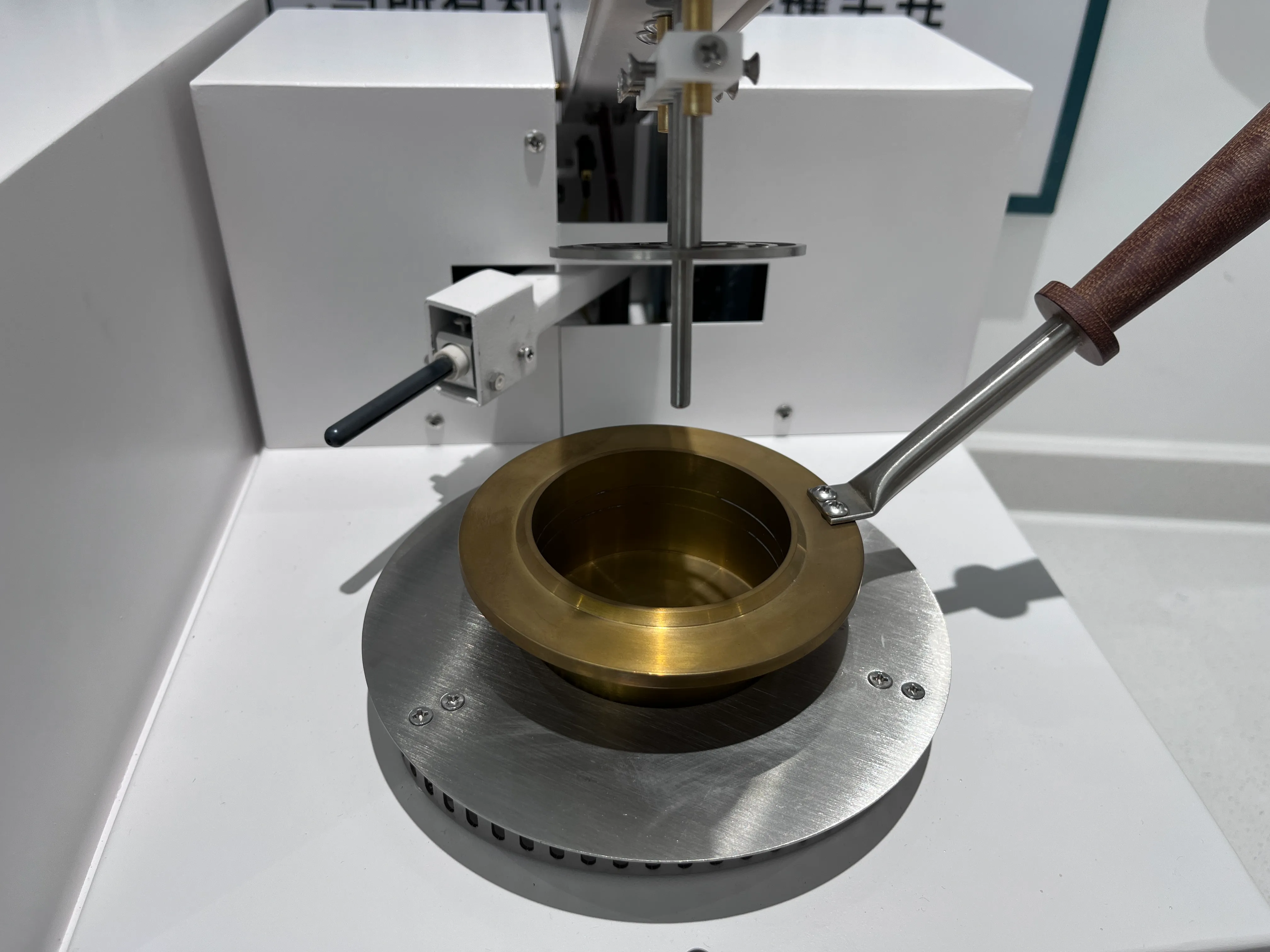

Differences between open cup flash point tester and closed cup flash point testerNewsOct.31,2024

-

The Reliable Load Tap ChangerNewsOct.23,2024

-

The Essential Guide to Hipot TestersNewsOct.23,2024

-

The Digital Insulation TesterNewsOct.23,2024

-

The Best Earth Loop Impedance Tester for SaleNewsOct.23,2024

-

Tan Delta Tester--The Essential Tool for Electrical Insulation TestingNewsOct.23,2024